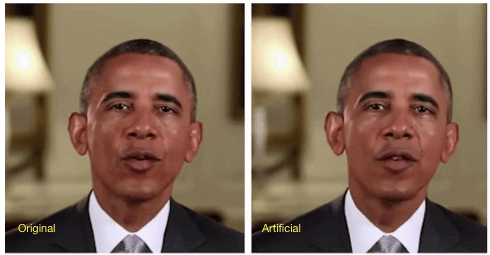

It’s no surprise that artificial intelligence has entered the mainstream. Manipulating and generating lifelike multimedia content such as photos or videos once required specialized skills or software; that barrier has been virtually eliminated. The resulting materials, known as deepfakes, are audiovisual content that mimics the appearance or voice of individuals or events with high realism. Deepfakes have been used to manipulate political outcomes (such as a fabricated call impersonating Joe Biden that discouraged New Hampshire voters from participating in the primary), create non-consensual pornography (of which pop star Taylor Swift was recently a victim), and impersonate business leaders making important announcements (a fake Mark Zuckerberg announcing a change at Meta). The emergence of deepfakes presents a significant challenge in courtrooms, where the authenticity of evidence is key.

Source: https://chameleonassociates.com/why-you-should-know-about-deepfake/

Deepfakes reach the courtroom in one of two ways: (a) as the subject of a crime or (b) as evidence offered to support a claim. The latter is particularly alarming, as deepfakes have the potential to corrupt the evidence in almost any case that relies on digital or audiovisual material, posing a serious risk to the fairness of legal processes.

The following best practices can help address the main problems deepfakes introduce in the courtroom:

1. Follow Federal Rule of Evidence 902(13)

FRE 902(13) provides that the following item is self-authenticating: “Certified Records Generated by an Electronic Process or System. A record generated by an electronic process or system that produces an accurate result, as shown by a certification of a qualified person that complies with the certification requirements of Rule 902(11) or (12). The proponent must also meet the notice requirements of Rule 902(11).”

2. Use a Self-Authenticating Tool for Collection

Using a legal-grade tool such as Page Vault, which is considered a self-authenticating tool under FRE 902(13), can minimize the risk of challenges to the authenticity of the evidence presented.

3. Maintain chain of custody properly

A properly documented chain of custody establishes that (1) the record, when originally produced, accurately captured the webpage in question, and (2) the record was not altered between collection and presentation in court.
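One common way to support the second point is to record a cryptographic hash of the file at collection and re-check it before presentation. The following is a minimal sketch of that idea, not a description of how Page Vault or any particular tool works. The function names, the log format, and the file names are hypothetical and chosen only for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of_file(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_custody_event(log_path, evidence_path, handler, action):
    """Append one custody entry (hypothetical format) to a JSON-lines log."""
    entry = {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "file": Path(evidence_path).name,
        "sha256": sha256_of_file(evidence_path),
        "handler": handler,
        "action": action,
    }
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")
    return entry


def is_unaltered(log_path, evidence_path):
    """Check that the file's current hash matches the first logged hash."""
    with open(log_path, encoding="utf-8") as log:
        first_entry = json.loads(log.readline())
    return first_entry["sha256"] == sha256_of_file(evidence_path)
```

In this sketch, `record_custody_event` would be called at collection and at each later handoff, and `is_unaltered` would be run before the record is offered. A matching hash shows only that the file has not changed since it was logged; it says nothing about whether the content was genuine when collected, which is why the first point above still depends on the collection process itself.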

4. Have an expert on the technology prepared

When presenting or challenging evidence, have an expert witness available to answer technological questions about the evidence at issue.

5. Review admitted evidence in detail prior to trial

FRE 902(11) states that, “...Before the trial or hearing, the proponent must give an adverse party reasonable written notice of the intent to offer the record — and must make the record and certification available for inspection — so that the party has a fair opportunity to challenge them.”

During this review, look for obvious signs of manipulation (e.g., asymmetries, mismatched lip movement, unnatural coloring). Deepfake detection tools can help during this process. If you suspect an image, video, or audio file is fake, consult an expert.

The emergence of deepfakes challenges the legal system's ability to authenticate evidence and maintain integrity, necessitating updates to the Federal Rules of Evidence and the adoption of best practices for evidence management. As the legal community grapples with these technological advancements, firms should adopt a strategic approach that includes self-authenticating tools, expert testimony, and a meticulous chain of custody.